This Project was created to help my friend write his masters thesis. The first implementation was written in ruby and the second in node.js. The goal was to gather all transactions of non-fungible tokens on the Ethereum blockchain to later be able to calculate profits and losses on a per wallet basis.

They both use the same logic:

- Login to dune.xyz

- Open the queries page

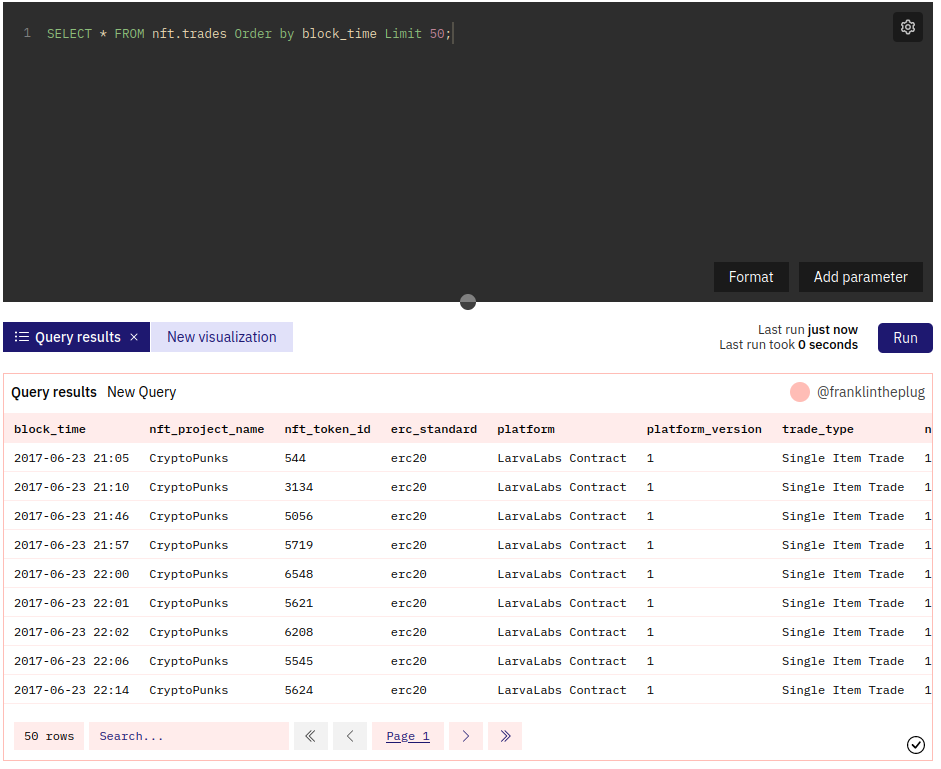

- Formulate an sql query and query the dune database

- Wait for the data to be loaded

- Scrape the data from the returned table

- Save the data to a csv file

- Profit?

As the data was asynchronously loaded the scraper needed to wait for the data to be loaded. The data was presented in a table with 50 rows per page. The scraper needed to also click through every single page and of the result set.

Dune query page

The data had to be scraped in sets of 50.000 entries as querying the database for more would often result in timeouts. Using offset and limit was the optimal solution to page through the underlying dataset.

I chose ruby first because I wanted to write some ruby code to better understand the language and what it offers. As I also knew how to work with html structures in ruby and how to parse them with nokogiri I decided to write the scraper in ruby. At some point the first crawler was working, but my friend still needed the data of 4 million transactions to be able to write the thesis.

So the second implementation was written in node.js. Which turned out to be much faster then the ruby scraper. Here I decided on using Puppeteer over Selenium because research showed that Puppeteer in headless mode is much faster then Selenium.